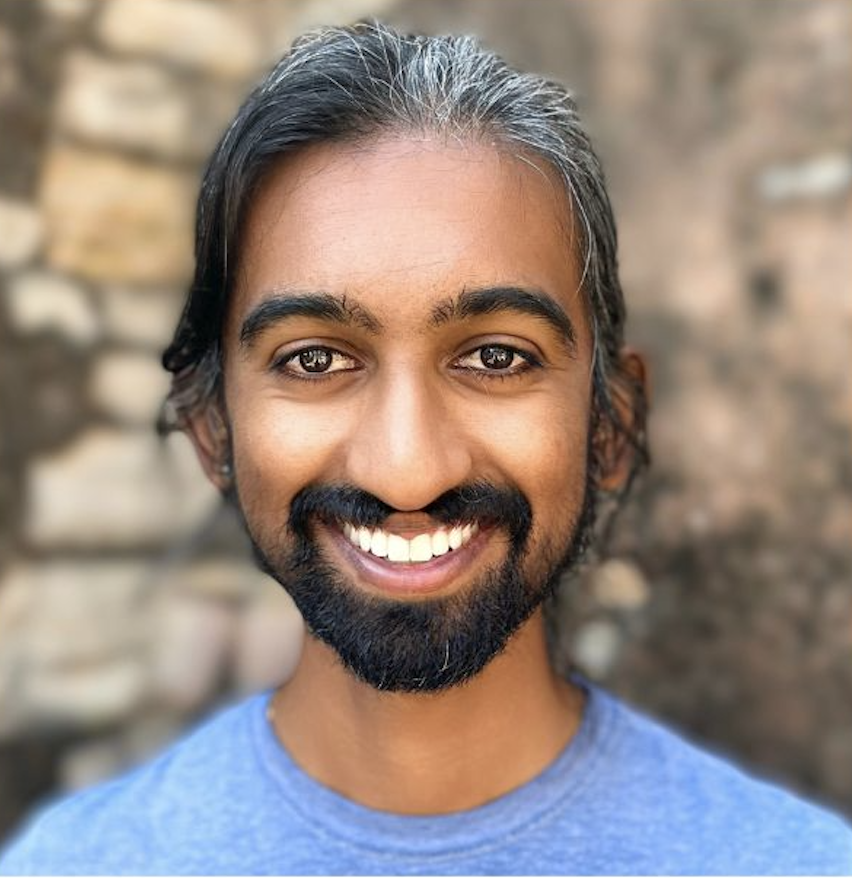

Suchin Gururangan

I am a research scientist at Meta GenAI, on the Llama team. I received my PhD in Computer Science from the University of Washington in 2024. I was supported by the 2022 Bloomberg PhD Fellowship, and was previously a visiting researcher at Meta AI and a predoctoral resident at AI2.

📥 Email

🧑🏾💻 Github

🎓 Google Scholar

📚 Semantic Scholar

𝕏 Twitter

✍🏾 Blog

Publications

2024

| The Llama 3 Herd of Models. Llama Team | code |

| DataComp-LM: In search of the next generation of training sets for language models. Jeffrey Li, Alex Fang, Georgios Smyrnis, Maor Ivgi, Matt Jordan, Samir Gadre, Hritik Bansal, Etash Guha, Sedrick Keh, Kushal Arora, Saurabh Garg, Rui Xin, Niklas Muennighoff, Reinhard Heckel, Jean Mercat, Mayee Chen, Suchin Gururangan, Mitchell Wortsman, Alon Albalak, Yonatan Bitton, Marianna Nezhurina, Amro Abbas, Cheng-Yu Hsieh, Dhruba Ghosh, Josh Gardner, Maciej Kilian, Hanlin Zhang, Rulin Shao, Sarah Pratt, Sunny Sanyal, Gabriel Ilharco, Giannis Daras, Kalyani Marathe, Aaron Gokaslan, Jieyu Zhang, Khyathi Chandu, Thao Nguyen, Igor Vasiljevic, Sham Kakade, Shuran Song, Sujay Sanghavi, Fartash Faghri, Sewoong Oh, Luke Zettlemoyer, Kyle Lo, Alaaeldin El-Nouby, Hadi Pouransari, Alexander Toshev, Stephanie Wang, Dirk Groeneveld, Luca Soldaini, Pang Wei Koh, Jenia Jitsev, Thomas Kollar, Alexandros G. Dimakis, Yair Carmon, Achal Dave, Ludwig Schmidt, Vaishaal Shankar | code |

| Language models scale reliably with over-training and on downstream tasks. Samir Yitzhak Gadre, Georgios Smyrnis, Vaishaal Shankar, Suchin Gururangan, Mitchell Wortsman, Rulin Shao, Jean Mercat, Alex Fang, Jeffrey Li, Sedrick Keh, Rui Xin, Marianna Nezhurina, Igor Vasiljevic, Jenia Jitsev, Alexandros G. Dimakis, Gabriel Ilharco, Shuran Song, Thomas Kollar, Yair Carmon, Achal Dave, Reinhard Heckel, Niklas Muennighoff, Ludwig Schmidt | code |

| LESS: Selecting Influential Data for Targeted Instruction Tuning. Mengzhou Xia, Sadhika Malladi, Suchin Gururangan, Sanjeev Arora, Danqi Chen | code |

| Breaking the Curse of Multilinguality with Cross-lingual Expert Language Models. Terra Blevins, Tomasz Limisiewicz, Suchin Gururangan, Margaret Li, Hila Gonen, Noah A. Smith, Luke Zettlemoyer |

| AboutMe: Using Self-Descriptions in Webpages to Document the Effects of English Pretraining Data Filters. Li Lucy, Suchin Gururangan, Luca Soldaini, Emma Strubell, David Bamman, Lauren Klein, Jesse Dodge | code |

2023

| OpenLM. Suchin Gururangan*, Mitchell Wortsman*, Samir Yitzhak Gadre, Achal Dave, Maciej Kilian, Weijia Shi, Jean Mercat, Georgios Smyrnis, Gabriel Ilharco, Matt Jordan, Reinhard Heckel, Alex Dimakis, Ali Farhadi, Vaishaal Shankar, Ludwig Schmidt. *Equal contribution | code |

| Time is Encoded in the Weights of Finetuned Language Models. Kai Nylund, Suchin Gururangan, Noah A. Smith | code |

| SILO Language Models: Isolating Legal Risk in a Nonparametric Datastore. Sewon Min*, Suchin Gururangan*, Eric Wallace, Hannaneh Hajishirzi, Noah A. Smith, Luke Zettlemoyer. *Equal contribution. ICLR 2024, RegML 2024. ✨Outstanding Paper Award at RegML 2024 Workshop✨ | code |

| Scaling Expert Language Models with Unsupervised Domain Discovery. Suchin Gururangan*, Margaret Li*, Mike Lewis, Weijia Shi, Tim Althoff, Noah A. Smith, Luke Zettlemoyer. *Equal contribution. JMLR 2024 | code |

| Editing Models with Task Arithmetic. Gabriel Ilharco, Marco Tulio Ribeiro, Mitchell Wortsman, Suchin Gururangan, Ludwig Schmidt, Hannaneh Hajishirzi, Ali Farhadi. ICLR 2023 | code |

2022

| lo-fi: distributed fine-tuning without communication. Mitchell Wortsman, Suchin Gururangan, Shen Li, Ali Farhadi, Ludwig Schmidt, Michael Rabbat, Ari S. Morcos. TMLR | code |

| M2D2: A Massively Multi-Domain Language Modeling Dataset. Machel Reid, Victor Zhong, Suchin Gururangan, Luke Zettlemoyer. EMNLP 2022 | code |

| Whose Language Counts as High Quality? Measuring Language Ideologies in Text Data Selection. Suchin Gururangan, Dallas Card, Sarah K. Dreier, Emily K. Gade, Leroy Wang, Blarry Wang, Luke Zettlemoyer, and Noah A. Smith. EMNLP 2022 | code |

| kNN-Prompt: Nearest Neighbor Zero-Shot Inference. Weijia Shi, Julian Michael, Suchin Gururangan, and Luke Zettlemoyer. EMNLP 2022 | code |

| Branch-Train-Merge: Embarrassingly Parallel Training of Expert Language Models. Margaret Li*, Suchin Gururangan*, Tim Dettmers, Mike Lewis, Noah A. Smith, and Luke Zettlemoyer. *Equal contribution | code |

| Time Waits for No One! Analysis and Challenges of Temporal Misalignment. Kelvin Luu, Daniel Khashabi, Suchin Gururangan, Karishma Mandyam, and Noah A. Smith. NAACL 2022 | code |

| DEMix Layers: Disentangling Domains for Modular Language Modeling. Suchin Gururangan, Mike Lewis, Ari Holtzman, Noah A. Smith, and Luke Zettlemoyer. NAACL 2022 | code |

2021

| All That’s ‘Human’ Is Not Gold: Evaluating Human Evaluation of Generated Text. Elizabeth Clark, Tal August, Sofia Serrano, Nikita Haduong, Suchin Gururangan, and Noah A. Smith. ACL 2021. ✨Outstanding Paper Award✨ |

| Expected Validation Performance and Estimation of a Random Variable’s Maximum. Jesse Dodge, Suchin Gururangan, Roy Schwartz, Dallas Card, and Noah A. Smith |

| Detoxifying Language Models Risks Marginalizing Minority Voices. Albert Xu, Eshaan Pathak, Eric Wallace, Suchin Gururangan, Maarten Sap, and Dan Klein. NAACL 2021 |

2020

| RealToxicityPrompts: Evaluating Neural Toxic Degeneration in Language Models. Sam Gehman, Suchin Gururangan, Maarten Sap, Yejin Choi, and Noah A. Smith. Findings of EMNLP 2020 | code |

| Don’t Stop Pretraining: Adapt Language Models to Domains and Tasks. Suchin Gururangan, Ana Marasović, Swabha Swayamdipta, Kyle Lo, Iz Beltagy, Doug Downey, and Noah A. Smith. ACL 2020. ✨Honorable Mention for Best Overall Paper✨ | code |

2019

| Variational Pretraining for Semi-supervised Text Classification. Suchin Gururangan, Tam Dang, Dallas Card, and Noah A. Smith. ACL 2019 | code |

| Show Your Work: Improved Reporting of Experimental Results. Jesse Dodge, Suchin Gururangan, Roy Schwartz, Dallas Card, and Noah A. Smith. EMNLP 2019 | code |

| Emergent coordination underlying learning to reach to grasp with a brain-machine interface. With many authors 🙂. Journal of Neurophysiology |

2018

| Annotation Artifacts in Natural Language Inference Data. Suchin Gururangan*, Swabha Swayamdipta*, Omer Levy, Roy Schwartz, Samuel Bowman, and Noah A. Smith. *Equal contribution. NAACL 2018 |

2014

| Analysis of Graph Invariants in Functional Neocortical Circuitry Reveals Generalized Features Common to Three Areas of Sensory Cortex. Suchin Gururangan, Alex Sadovsky, and Jason Maclean. PLOS Computational Biology 2014 |